Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

🍎 Teens spend over 1 hour on phones during school: Your teen is likely distracted in the classroom, according to a new study published in JAMA Pediatrics. Researchers found that half of teens (ages 13–18) use their smartphones for at least 66 minutes during school hours, and a quarter use them for more than two hours. While some teens use their phones for research or schoolwork, the majority of students used social media and messaging apps. (Gotta maintain that Snapstreak, after all.) This isn’t ideal for a bunch of reasons, ranging from lost learning to missed opportunities to socialize with peers. Some schools are implementing phone bans during class hours, but if yours hasn’t, here are a few options: use parental controls to limit screen time and notifications during school hours, and talk to your child about why it’s important to limit their phone use at school. If they struggle with focus or forget assignments, keeping their phone off at school is an easy first step.

⚖️ Kids Off Social Media Act advances out of committee: There’s a new child safety bill on the block. The Kids Off Social Media Act (KOSMA) — a bipartisan bill that would ban kids under 13 from social media — was approved by the Senate Commerce Committee, setting it up for consideration by the full Senate. The bill builds on existing platform policies, as most social media companies already set their minimum age at 13. If passed, the bill would require social media platforms to enforce age verification and mandate that federally funded schools block access to social media on school networks and devices. The bill is gaining traction at a time when more people are becoming aware of social media’s negative effects on adolescents; a recent study by Sapien Labs links smartphone usage to increased aggression, hallucinations, and detachment from reality among teens, and 13-year-olds are experiencing more severe mental health issues compared to 17-year-olds — possibly because they received their phones at younger ages.

🙅 Most teens don’t trust AI or Big Tech: Adolescents feel sus about generative AI like ChatGPT and DeepSeek, according to a new research brief from Common Sense Media. Over a third of teens say they’ve been misled by fake content online, including AI-generated content (aka deepfakes). Over half (53%) of teens don’t think major tech companies will make ethical and responsible design decisions, either. They’re also aware that Big Tech tends to prioritize profits over safety: a majority (64%) don’t trust companies to care about their mental health and well-being, and 62% don’t think companies will protect their safety if it hurts profits. With the rise of misinformation and AI-generated content, now is a good time to check in with your teen about how to spot deepfakes and verify online information before they share it.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

Back in high school, you probably learned the importance of citing reliable sources and telling credible information apart from dubious claims. Today’s kids face the same challenge, but in a digital world filled with AI-generated deepfakes and misinformation. Knowing how to evaluate online sources is an essential skill. Here are some conversation starters to help your child think critically about what they see online.

💕 Valentine’s Day is this Friday! If you’re looking for family-friendly movies to watch with your teen or tween, check out this roundup. We’ll take any excuse to rewatch Say Anything.

📱 Sharing content about your kids online is tempting, but “sharenting” has its downsides, too. We’re sitting with the lessons from this article on Psychology Today.

⏳ Curious about whatever’s happening with TikTok? The ban has been delayed for at least 75 days, but still needs an American buyer. YouTube personality MrBeast, the CEO of Roblox, and Microsoft are just some of the names that are eyeing a TikTok bid to keep it in the US.

🧐 In honor of Safer Internet Day, UNICEF debunks four myths about children’s online safety that are worth a read.

Delulu? Skibidi? Kids today are speaking a language all their own. These words, phrases, and acronyms might pop up in text messages and everyday conversations, but what do they even mean? Here’s your field guide to common teen and tween phrases, from Gen Alpha terms to Gen Z sayings.

Ate: Praise or admiration for someone’s actions, choices, or performance. For example, “she ate” in reference to a friend’s outfit means that she looks great.

Bussin: Something extremely good or excellent, such as food.

Cooked: A state of being in danger or doomed. This term is often used facetiously. For example, if your teen is anxious about the consequences of not doing their chores, they might say, “I didn’t put the dishes away like Mom asked. I’m cooked.”

Delulu: Short for “delusional.” It describes someone with unrealistic beliefs or overly optimistic expectations.

GOAT: This stands for the Greatest Of All Time. If someone describes you as the GOAT, it’s a compliment.

High key: Something is intense or over-the-top. High key (also styled “highkey”) can also mean “really” or “very much,” as in “She highkey wants you to ask her to the dance.”

Igh: Synonymous with “alright,” “I guess,” or “fine.”

It’s giving: Used to describe when something is giving a certain feeling or vibe. You might describe someone’s outfit as, “It’s giving Billie Eilish.”

Kms/kys: Acronyms that stand for “kill myself” and “kill yourself.” While they may be intended as dark humor, if you see either one in your child’s texts, take it seriously.

Left no crumbs: Someone did something extremely well or perfectly. This phrase is often paired with “ate.” For example, the sentence “She ate and left no crumbs” might refer to someone’s perfect performance in a play.

Low key: Restrained, chill, or modest. Low key (or “lowkey”) is the opposite of high key. If someone is going to a low-key party, it’ll be a chill hangout.

Menty b: Short for “mental breakdown.” Menty b is meant to be a humorous way to describe someone’s feelings during periods of high stress.

Mew/mewing: The practice of placing your tongue on the roof of your mouth to improve jawline aesthetics. As a Gen Alpha trend, “mewing” involves putting a finger to your lips as if you’re shushing someone, then running your finger along your jawline. A tween might mew in response to a question, implying that they can’t answer because they’re working on their jawline. (This one is a little convoluted.)

Mid: Something is average, low-quality, or middle-of-the-road.

Ngl: This stands for “not gonna lie.” Someone might use this if they’re going to share their honest opinion about a topic.

Ohio: Used to describe someone as weird or cringey.

Ong: Short for “on God.” Expresses strong belief, intense emotion, or honesty. For example, if someone is concerned about nearly missing their final, they might text, “I slept through my alarm ong.”

Skibidi: This term can mean cool, bad, or dumb, depending on what it’s paired with. “Skibidi Ohio” means someone is really weird, while “skibidi rizz” means they’re good at flirting. The term comes from a YouTube series called Skibidi Toilet, in which toilets with human heads battle humanoids with electronics as heads. What a world.

Sigma: Means good, cool, or the best at something. However, “what the sigma” doesn’t really mean anything; it’s just another way of saying “what the heck.”

Smfh: Short for “shaking my f**king head.” It’s used to react to something disappointing or upsetting.

Smth: An abbreviation for “something.”

Stan: To enthusiastically support something or someone.

Tea: Gossip or secrets. The phrase “spill the tea” means that someone wants to hear the latest juicy information.

If you feel like you’re having your own menty b after trying to understand Gen Z sayings, we’re lowkey right there with you. But you’re not cooked, parents. Kids have glommed onto different words, phrases, and even emojis as part of their cultural consciousness for generations (see: on fleek, basic, cool beans, and feels). Staying informed and trying to translate your child’s messages are part of the experience of being a parent.

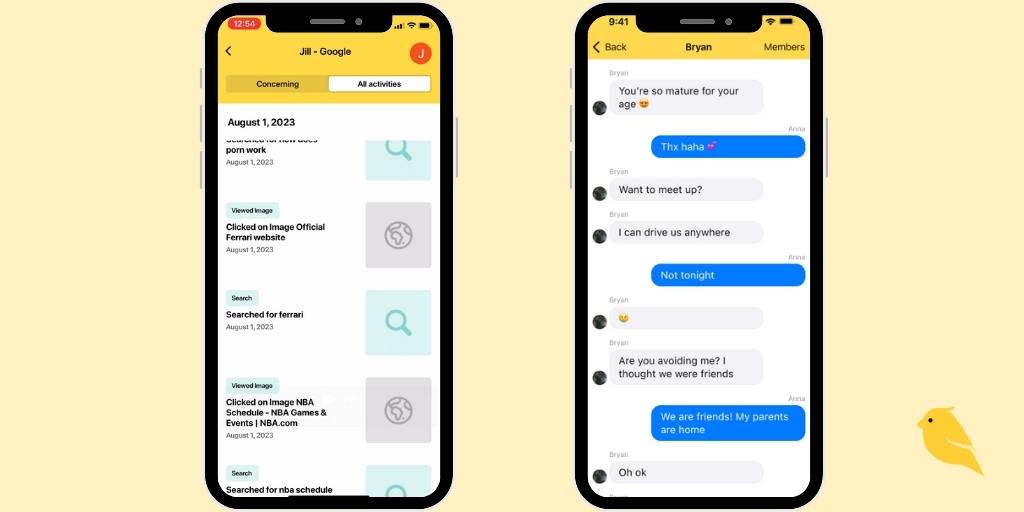

For extra help monitoring what matters — your child’s safety — there’s BrightCanary. The app uses advanced AI technology to monitor your child’s text messages, social media, and searches and flags concerning content, including explicit material, harassment, and drug references. Download on the App Store today to get started for free.

If you’re a parent of a tween or teen, you’re no stranger to unusual acronyms and slang. But while some terms are harmless, others are cause for concern. “KYS” is one example — the term is a red flag if your child sends or receives it. But what does KYS mean, and how should you talk to your child about it?

“KYS” stands for “kill yourself.” The term is used to make fun of someone after they do something embarrassing, or it can be used as a form of harassment.

The meaning largely depends on context, but the effect can be hurtful either way. For example, if your teen posts a video of them playing the guitar, a stranger might comment KYS to taunt them. In other instances, a bully might repeatedly text KYS to their victim with the intent to cause them mental or emotional harm.

If you or someone you know is thinking about attempting suicide, please call or text 988 to reach the free, 24/7 988 Suicide & Crisis Lifeline (formerly the National Suicide Prevention Lifeline).

KYS is a form of internet slang that has been around since the early 2000s, when it circulated on message boards and forums. Today, kids communicate through social media and text messages, so acronyms like KYS have made their way into texts and inboxes.

KYS appears in places young adult males tend to frequent, like Reddit, Discord, and Steam. But the term can be used by anyone; it can be found on social media platforms such as Instagram and TikTok, as well as texts sent by cyberbullies.

Even if KYS is sent as a joke, it makes light of suicide and should be treated seriously. If you’re monitoring your child’s text messages and see this acronym in their threads, here’s what to do:

One of the more difficult things to communicate to kids is that even their jokes can have consequences. If they know what KYS means, they should also know that joking about suicide isn’t cool. And if you see the term pop up in their messages, take a moment to step in and have a conversation about it. Sure, it might be nothing — or it might not. To take a proactive approach to monitoring your child’s online activity and messages, start with BrightCanary. Download on the App Store today and start your free trial.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

🤖 Your teen may have asked AI to solve for X: A new Pew Research Center poll asked US teens ages 13–17 whether they’ve used ChatGPT for schoolwork. Twenty-six percent said they had, double the share from two years ago. Teens in 11th and 12th grade were more likely than seventh and eighth graders to use AI as their study buddy (31% vs. 20%).

While some applications of AI can be helpful, like outlining a paper or identifying typos, it gets problematic when kids use the technology to do their homework for them. Twenty-nine percent said it’s acceptable to use ChatGPT to solve math problems, even though a recent study found that the AI can answer questions only slightly more accurately than a person guessing. And, of course, when the robots write your papers for you, you don’t learn how to write a compelling argument. On our blog, we covered tips to manage the potential downsides of ChatGPT and how to talk to your kiddo about it.

📲 Most kids ages 11–15 have a social media account: According to an analysis of a national sample of early adolescents in the US, a majority (65.9%) of kids have a social media account — even though social platforms say their minimum age is 13. In fact, under-13 social media users had an average of 3.38 social media accounts (mostly TikTok). Notably, just 6.3% of participants said they had a secret social media account hidden from their parents. We listen and we don’t judge, but social media isn’t great for younger kids — it can expose them to addictive algorithms, problematic content, and online harassment, among other concerns. If your child has a social profile, we recommend monitoring. Here’s how to do it.

😠 Social media is making us grumpier: A study published earlier this month investigated the relationship between social media use and irritability, aka feeling grumpy or more bothered by things and people than usual. Frequent use of social media was associated with significantly higher levels of irritability, especially for people who posted often. The findings were based on adults, but it’s worth considering how frequent social media use can affect your already-moody teens and tweens. Our advice: help your child replace constant social media use with better, more constructive ways to spend their leisure time, ideally away from screens. Save these tips to help your child make stronger offline friendships.


Believe it or not, we’ve reached the end of January. In terms of our digital lives, the end of the month is a great time to reflect on what went right, what didn’t, and where we can grow in the coming month. Here are some thought-starters to bring to your next roundtable with your child.

Beta testing? Cuffing? Teen dating slang is its own language. If your teen is starting to show interest in dating and relationships, here’s what all those terms (and weird emojis) mean.

Roughly 55% of kids ages 13 to 17 use Snapchat, and about half say they use the platform daily. While Snapchat offers fun features like face filters and easy ways to connect with friends, there are also hidden risks every parent should know about. Here are 10 bad things about Snapchat and how to navigate them for your child’s safety.

😊 Did you know that there’s a science behind making yourself happier? Some steps include valuing time over material possessions, expressing gratitude, and, yes, giving up social media for extended periods of time. Check out the full writeup via Parenting Translator.

⚖️ “Social media platforms are not neutral bystanders; they actively design systems that promote engagement at any cost, even if it means exposing children to harmful content. We urge Congress to prioritize this legislation — it’s a matter of life and death.” Read Laura Berman and Samuel Chapman’s op-ed about why we need social media regulation.

💻 In today’s day and age, how do you teach kids to be “good at the Internet”? Big fans of this Romper essay by Rebecca Ackermann.

Snapchat is one of the most popular apps among teens. Roughly 55% of kids ages 13 to 17 use Snapchat, and about half say they use the platform daily. While Snapchat offers fun features like face filters and easy ways to connect with friends, there are also hidden risks every parent should know about. Here are 10 bad things about Snapchat and how to navigate them for your child’s safety.

Snapchat’s disappearing messages are designed to vanish once they’ve been viewed or once they expire (after 24 hours). The problem is that vanishing messages can hide all sorts of concerning content, ranging from explicit messages to online harassment. And because they disappear from your child’s device, they’re difficult to track down and use as evidence of wrongdoing.

Disappearing messages can also encourage your child (and their friends) to engage in risky behavior, like sending inappropriate pictures. But just because something seems private doesn’t mean it is. Screenshots or third-party apps can still save Snaps without the sender’s knowledge or permission.

Did you know? BrightCanary monitors what your child types in all the apps they use, including Snapchat messages.

The Snapchat Snap Map (say that three times fast) allows people to see a user’s real-time location. This feature is disabled for teen accounts by default, but if it’s enabled, friends can use it to track your teen’s whereabouts.

Location sharing might be helpful if you’re a parent trying to track down your teen to pick them up after an event, but it’s concerning if your teen accepts friend requests from people they don’t actually know in real life. Even among people they do know, location sharing can expose kids to stalking risks and unintended privacy breaches.

For example, if your teen wants to hang out with one friend but not another, the Snap Map might expose their location and lead to some difficult conversations within their friend group.

Similar to TikTok and Instagram, Snapchat has a curated collection of short video content from various publishers, creators, and news sources called “Discover.” Users can also view “Stories” on different topics. These features are personalized based on a user’s interests and viewing habits, but they can also expose kids to adult content, including sexual or violent material.

On Reddit, parents have complained about the explicit material shown on Snapchat’s Discover feed. “I just don’t think a company should be running hog wild with sexual imagery and highly politicized or controversial articles/voices when they have minors that are on the app,” one user wrote.

While parents can report and block certain types of content from appearing, there isn’t a way to reliably set content filters around Snapchat’s Discover or Stories features.

Anonymity can encourage people to behave in ways they normally wouldn’t in real life — including harassing others through group chats and disappearing messages on Snapchat. The platform’s anonymous nature can expose your child to cyberbullying on social media, especially if they accept friend requests from people they don’t know.

Snapchat’s most recent transparency report underscores the scale of the problem. In 2024, the platform reported 6.5 million instances of harassment and bullying. Snap took enforcement action on just 36.5% of them, which means the majority of reports did not lead to enforcement.

Snapstreaks are one of the ways Snapchat gamifies the user experience. A Snapstreak refers to the number of consecutive days two users send each other Snaps (pictures or videos). The streak expires if either user fails to send a Snap within a 24-hour window.

They might sound fun, but Snapstreaks can also lead to obsessive behavior and increased screen time, especially if your teen has streaks running with more than one friend. Maintaining a streak gives you social credibility, and a teen’s personality may even be influenced by the number of streaks they have going.

As if teens need more peer pressure in their lives, right?

The fear of missing out (FOMO) refers to a feeling of anxiety about being excluded from other friend groups or missing out on something more fun happening elsewhere. Remember, social media is a highlight reel — if your teen is constantly seeing their friends posting about going to exciting places, hanging out with people, and buying certain items, they might feel like their own life is boring or less-than in comparison.

FOMO isn’t unique to Snapchat, but the platform’s culture rewards people who are chronically online. That visibility can give your teen more insight into what their peers are doing around the clock, which may negatively impact their own sense of self-worth — especially if that’s all they consume online.

Users can easily receive friend requests or messages from unknown people, increasing the risk of dangers like grooming, harassment, and access to drugs.

In the past year, Snapchat has tried to improve teen safety by limiting teens’ interactions with strangers. New safeguards make it harder for strangers to find teens: teen accounts don’t show up in search results unless the searcher has several mutual friends with them or is an existing phone contact.

However, those changes aren’t foolproof — it’s still possible for people to connect with strangers on Snapchat, especially if your child fibbed about their age when they signed up for their account.

In 2022, the Drug Enforcement Administration named Snapchat as one of the platforms that drug dealers use to peddle illicit substances, which can be laced with deadly amounts of fentanyl. Across the country, victims’ families are suing Snapchat and campaigning for stricter regulations.

Snapchat has historically been used for illegal activities, and the platform is struggling to keep up with the scale of the problem. In 2024, the platform reported approximately 452,000 instances of drug content and accounts, but Snap enforced just 4.1% of the total reports.

Snapchat’s gamified features, like Snap Scores and Snapstreaks, are designed to maximize engagement on the platform. This isn’t unique to social platforms, but it’s especially problematic when the majority of Snapchat users are between the ages of 15 and 25, an age group that is developmentally prone to impulsive behavior.

Without appropriate boundaries and screen time limits, it’s relatively easy for young people to excessively use Snapchat. And that’s already a trend — according to Pew Research Center, 13% of teens use Snapchat almost constantly, compared to 12% on Instagram and 16% on TikTok.

Snapchat recently improved its parental control settings, dubbed Family Center. Now, parents can see their child’s friend list and who they’ve contacted most recently, and they can more easily report suspicious behavior. However, Snapchat comes up short in a few key aspects: parents aren’t able to view what their teens are messaging, and there are no content filters to prevent kids from accessing inappropriate material.

Not every parent and child will need to have message monitoring. But parents should have the option to do so if they need it.

Some of the concerns with Snapchat, like location sharing and stranger danger, are also risks with other social media apps. But Snapchat’s vanishing messages are particularly concerning, as is its comparative lack of parental controls and content filters. So, is Snapchat safe for kids? It depends on how it’s used and how closely you’re able to supervise.

We recommend talking with your child about the risks inherent in Snapchat. There’s nothing wrong with having them hold off on getting the app. If you do decide to let them Snap, walk through their privacy settings together, set up Snapchat Family Center, and reiterate your expectations: for example, they’re only allowed to talk to a limited number of contacts, and they have to consent to periodic phone checks during the week.

If you set device rules, we recommend putting them down in writing with a digital device contract.

Snapchat is a popular app among teens, but it’s not really designed with the best interest of minors in mind. It’s important for parents to stay involved if they allow their kids to use Snapchat. Monitor their activity, set boundaries, and use parental control tools.

Snapchat has risks, including privacy concerns and exposure to harmful content. Parents should actively monitor their child’s activity if they allow Snapchat.

Set up Snapchat Family Center, use the strictest privacy settings, turn off Snap Map, and encourage your child to only accept friend requests from people they know in real life. If anyone makes them feel uncomfortable online, talk to them about how to handle it.

Instagram is a popular alternative with features similar to Snapchat’s and stronger parental controls. Other messaging alternatives include Messenger Kids and iMessage with BrightCanary monitoring.

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

😅 Meta is getting rid of fact checkers. What that means for parents and kids: Meta, the parent company of Facebook and Instagram, recently announced that it is ending its third-party fact-checking program. Meta CEO Mark Zuckerberg acknowledged that, as a result, the company will catch less “bad stuff” posted on its platforms. That’s a red flag, considering that roughly 60% of US teens use Instagram alone. The company is switching to a “Community Notes” model, similar to X’s. In practice, the new policy means your child is more likely to see potentially incendiary content, such as hate speech and misinformation, especially if they like or share a post on their feed. If your child uses Insta, here’s what we recommend:

🔒 New social media and children’s device laws officially kick in: Although the Kids Online Safety Act fell flat last year, several major social media bills have taken effect at the state level. In Florida, children under 14 are no longer allowed to use social media, and minors aged 14 and 15 can only use it with parental consent. In Utah, SB 104 prevents children from accessing obscene material via Internet browsers or search engines. And, notably, people in most of the South can no longer access some major pornography websites, which have blocked their sites in protest of state age verification laws (the Supreme Court will weigh in on those laws this week).

⏳ Let’s talk about the impending TikTok ban: TikTok is on the clock. No, that’s not a Kesha lyric. The popular social media platform will shut down in the U.S. by Sunday, Jan. 19 if the ban is upheld. As a recap, TikTok was put on the chopping block last year due to national security concerns. TikTok’s parent company, ByteDance, has until the 19th to sell the app’s U.S. operations to an American owner or shut down entirely. At that point, TikTok will be removed from app stores and won’t be available for new downloads. No, your phone won’t self-destruct: the app will likely still work on your phone for a little while, even after the 19th.

The reasons for the ban are controversial; peer-reviewed research suggests that TikTok is being used to shape the West’s public opinion of China. At the same time, TikTok’s many faults also apply to other popular social media platforms (have you seen Meta’s terms of service?). Incoming President Trump has signaled that he plans to prevent a full TikTok ban once he takes office next week, so this is still very much a developing story. We’ll keep you posted.


Did you know that the BrightCanary app includes an AI chatbot designed specifically for parents? It’s called Ask the Canary, and it answers your toughest parenting questions 24/7. One of our writers ran some situations by the AI; you can read the full writeup on our blog. And if you want to try Ask the Canary yourself, just download BrightCanary on the App Store!

Question: “I got an alert on BrightCanary that people are sending hurtful texts to my child. What are some conversation starters I can use to talk to them about it?”

Ask the Canary’s response: “I noticed some of your friends have said hurtful things in your text messages. How does that make you feel?”

Question: “My child is posting inappropriate things online. What are some conversation starters I can use to talk to them about it?”

Ask the Canary’s response: “I noticed something that worried me in your posts. What was your intention when you posted this? How do you think these posts align with the values we cherish as a family?”

Question: “I want to know more about a show my child is watching. What are some conversation starters I can use to ask them about it?”

Ask the Canary’s response: “Can you tell me about the show you’re watching? How does this show compare to other shows you like?”

Writer Rebecca will never forget when her 10-year-old niece sent her first text. It was a simple “Hi,” but that word also signaled the start of a new chapter for her — and a whole new set of safety considerations for her parents. This is where a reliable app for parents to monitor text messages is essential.

Apple’s parental controls are impressive, but they don’t give you the ability to actually see what your child is texting. Other parental monitoring apps come up short on iPhone, but BrightCanary was specifically designed for Apple devices, so it actually works. Here’s what parents and family members should know about BrightCanary text monitoring, how to set it up, and tips to get the most out of the app.

Ah, oversharing about your personal life online. We’ve all seen it, and most of us have done it a time or two as well. But when it comes to our kids, oversharing on social media can be particularly risky.

Kids may not be aware that they’re oversharing — they may simply want to talk about their experiences with their friends, without realizing that the information is public or can be easily screenshotted and shared. Here’s everything you need to know about the dangers of oversharing online and how to help your child avoid it.

🏛️ In California, a federal court upheld most of SB 976, also known as the Protecting Our Kids from Social Media Addiction Act. The law prevents social media platforms from knowingly providing an addictive feed to minors without parental consent and takes effect on January 1, 2027. NetChoice, a powerful tech lobbying group, challenged the law on First Amendment grounds, and while the court blocked some of its provisions, social media companies will still be expected to adjust their feeds for minors by 2027.

🎮 Is your child asking about the video game Marvel Rivals? The game is rated T for teens, but there’s a dearth of information about whether the game is appropriate for kids — so we wrote about it on our blog.

🤔 Want to be the most informed parent in the room? Subscribe to Parent Pixels and get this newsletter a day early!

Welcome to Parent Pixels, a parenting newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. This week:

🤳 How do teens use social media today? New findings from the Pew Research Center reveal that about one-third of teens are on social media almost constantly. Our takeaway is that — like it or not — social media is a social reality for a majority of US teens. It’s essential that parents stay involved, use parental controls, and talk to their kids about setting boundaries around the digital world. Here are a few highlights from the Pew report:

📱 Cell phones are fueling school fights: A review by the New York Times of more than 400 fight videos from schools across the country reveals a troubling trend: middle and high school students are using phones and social media to arrange, provoke, film, and share violent campus brawls. These videos often ignite new cycles of cyberbullying, verbal aggression, and physical violence, as students pressure peers to fight for the sake of creating shareable clips. Some districts are now facing negligence lawsuits from parents, while others have sued social media companies, claiming their “addictive” features drive compulsive student use and amplify the problem. “Cellphones and technology are the No. 1 source of soliciting fights, advertising fights, documenting — and almost glorifying — fights by students,” said Kelly Stewart, an assistant principal at a high school in Juneau, Alaska. “It is a huge issue.” Here’s how to spot and address cyberbullying on social media.

💊 About 60% of young people have seen drug content on social media: The marketing of illegal drugs on open platforms, such as social media and messaging apps like Telegram, has increased in recent years, according to a story in Wired. Companies claim they remove millions of pieces of drug-related content each year, but their efforts aren't foolproof: dealers ran hundreds of paid ads on Meta platforms in 2024 to sell illegal substances. As much as 13% of social media posts may advertise illegal drugs, and among 13- to 18-year-olds, 10% have reported purchasing drugs via social media. Those exposed to drug ads were 17 times more likely to purchase drugs on social media than those who had not seen such ads. These ads are common enough that young people can stumble upon them on TikTok and Instagram, even if they're not actively looking. Parents, talk to your kids about the risks of drug content online, and save these tips on what to do if you find drug content on your child's phone.

Parent Pixels is a biweekly newsletter filled with practical advice, news, and resources to support you and your kids in the digital age. Want this newsletter delivered to your inbox a day early? Subscribe here.

The end of one year and the start of a new one is a great time to talk to your child about their goals and resolutions. Here are some conversation-starters to get them thinking about how they want to shift their relationship with technology in the new year.

Is your child being harassed via text? Here are the signs parents should know about cyberbullying through texting and how to support your child.

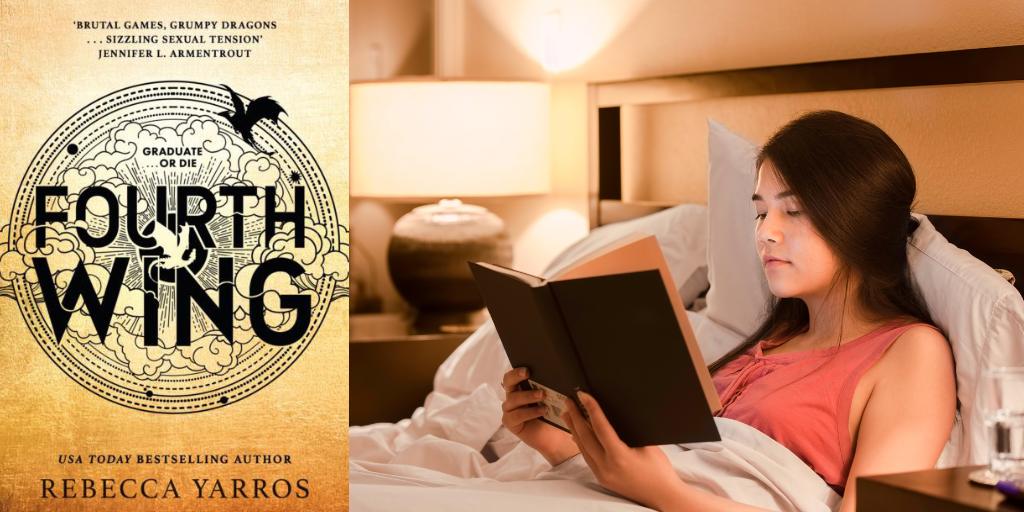

Fourth Wing is a fantasy book made popular, in part, by BookTok. The next installment in the series comes out in January, and it might be on your child’s radar. But what’s the age rating?

🎬 Looking for something to watch with your kids this season? Check out Common Sense Media’s list of the best movies of 2024.

🤓 Did you know? At the BrightCanary blog, we publish resources every week to help you keep up with the latest news and updates about digital parenting, monitoring, and more.

👋 Connect with us on social media: we’re on Instagram, Facebook, TikTok, Pinterest, and YouTube.

Fourth Wing is the first book in The Empyrean series by author Rebecca Yarros. The book was released in May 2023 and quickly rose to viral popularity on social media. The plot follows protagonist Violet, who fights for her life at Basgiath, a war college that trains dragon riders to protect their kingdom from the growing threat of war. If your child is interested in reading Fourth Wing, here’s what parents should know about the age rating, content, and more.

Fourth Wing is rated 16+ due to mature themes, including violence, strong language, and sexual content. The novel is often described as romantasy (a mix of the romance and fantasy genres), and the romance between the main characters plays a key role throughout the series.

Although the series is sometimes shelved in the Young Adult section, Fourth Wing is definitely not YA. There are explicit sex scenes, and violence is a major component of weeding out potential riders at Basgiath. For example, characters not only fall to their deaths, but are also assigned to fight each other each week — sometimes to deadly ends.

Want to make sure your child is searching for age-appropriate material online? BrightCanary helps you stay informed by monitoring texts, Google, YouTube, and social media. Try the app for free today.

There's plenty of strong language in Fourth Wing, including frequent profanity (the f-word, specifically) and descriptions of sexual acts ranging from making out to intercourse. Violence is described in graphic language, including characters having their throats ripped out, getting stabbed, and being burned to ash.

There is sex and nudity in Fourth Wing, and the amount of sexual content differs from book to book. Fourth Wing has two extended sex scenes late in the book, and some readers skip chapters 30 and 32 for this reason. There are also sexual encounters in the second book, Iron Flame, and the third, Onyx Storm.

Readers and reviewers on social media often rate books by their “spice” level, a term that refers to how much sexual content the book contains. Fourth Wing is generally considered spicy, although less so than the A Court of Thorns and Roses series.

There’s plenty of violence in Fourth Wing, including violent fight scenes, poisoning, deaths when riders attempt to bond with their dragons, and more. In Iron Flame, torture is a significant plot point. Violence is one of the ways through which potential cadets are weeded out of Basgiath, so only the strongest remain.

It’s worth noting that Violet has a chronic illness, and Yarros takes care to showcase how she navigates the physical world with her condition, which affects her joints and mobility. Violence both serves the plot and impacts Violet’s character arc.

Characters occasionally drink alcohol and smoke a substance that produces a similar effect to cannabis, but these scenes aren’t major parts of the plot.

The young adult genre generally targets readers between the ages of 12 and 18. However, Fourth Wing is not a young adult book. It's considered New Adult, which is intended for readers between the ages of 18 and 25. Your 14-year-old may not be ready for the violence, language, and sexual themes in the Fourth Wing series.

There are five books planned in the Fourth Wing series. If your child has expressed any interest in romantasy books like ACOTAR, this series might be on their radar. As with any new piece of media, it's worth talking to your child about the content they might encounter in Fourth Wing. It's also a good idea to read the book with your child and decide if it's appropriate for them.


📍 Instagram and Snapchat make location sharing easier: Meta now allows users to share their live location in Instagram DMs for up to 1 hour or pin a spot on the map for easy sharing. This feature is off by default, and only those in the specific chat can see the shared location. In very related news, Snapchat debuted location sharing through Family Center, the app's parental control hub. Once set up, parents and teens can share their locations with each other. Parents can also see who their teen is sharing their location with and receive travel notifications when their teen arrives at places like school or home. It's actually refreshing to see the apps include some safety considerations, like restricting location sharing to accepted friends only on Snapchat. However, location sharing still raises concerns, such as teens sharing their location in a group DM that might include strangers. Remind your teen to only share their location with people they know in real life and to always prioritize their privacy. Now's a good time to talk about when they might want to use this feature (like coordinating pickups after an event) and when they shouldn't (like meeting up with someone they haven't met in person before).

🕳️ YouTube pushes eating disorder videos to young teens, report suggests: The Center for Countering Digital Hate (CCDH) found that YouTube's algorithm recommended eating disorder content to minors, including videos that violated its own terms of service. Researchers created simulated 13-year-old users who watched eating disorder-related videos, and YouTube's algorithm responded by serving more harmful content, such as an “anorexia boot camp” video and other harmful videos that had accrued an average of over 388,000 views each. YouTube failed to remove, age restrict, or label the majority of videos the CCDH researchers flagged as harmful, and even profited from ads placed next to the content. This rabbit hole of negative content isn't exclusive to YouTube; it's a risk on any platform that uses engagement metrics to recommend videos without factoring in age or safety. Parents, here's how to talk to your kids about the risks of eating disorder content on video platforms and on social media.

😬 Guys, TikTok might actually get banned: A federal appeals court upheld the January 19 deadline for TikTok to be sold or face a ban in the United States. As a recap: earlier this year, President Joe Biden signed a law requiring ByteDance, TikTok's parent company, to sell the app to an approved buyer due to national security concerns or face a ban. ByteDance has asked the Supreme Court to review the statute, but unless the Court intervenes, the ban will take effect as scheduled. If your child is asking about the ban, here are some helpful talking points.


Let’s talk about location sharing. On the one hand, it’s a helpful way to make sure your child gets where they need to go. On the other hand, without boundaries in place, it can make your child feel like they lack your trust. Here’s how to start a conversation with them about it.

A kid’s first phone is a big step, but with some proper planning, you’ll set them up for success by teaching healthy tech boundaries. Here’s what we recommend.

Most US teens use iPhones, which means it’s important to find a parental monitoring app that’s effective on Apple devices. Here are a few of the best options for parents in 2025.

🙈 A majority (62%) of social media influencers don’t verify information before sharing it with their audiences, highlighting their vulnerability to misinformation. If your child gets all their news and updates from influencers, this is your reminder to talk to them about digital literacy.

⌛ Most popular social platforms have a minimum age of 13, but 22% of 8- to 17-year-olds fib about their age on social media, according to a report from Ofcom, the UK's communications regulator. Although apps like Instagram and TikTok have safety measures in place for underage accounts, those go out the window if kids pretend to be adults online.

⚠️ A proposed bill in California would require social media platforms to display warning labels cautioning users about the platforms' potential impact on youth mental health. This initiative echoes US Surgeon General Vivek Murthy's proposal to include tobacco-like warning labels on social networks, aiming to raise awareness about the risks of prolonged exposure to these platforms.

⚖️ The Kids Online Safety Act is unlikely to pass this year, despite bipartisan support, last-minute changes, and an endorsement from Elon Musk on X.

It’s challenging to be a kid in today’s digital world. As many as 59% of teens have experienced cyberbullying, and 25% of teens think social media has a negative influence on people their age. Parental monitoring apps can help parents stay involved, protect their kids from online risks, and teach their kids how to use the internet safely. A majority of US teens use iPhones, which means it’s important to find the best parental monitoring app for iPhone that balances safety and independence. Here are a few of the best options for parents in 2025.

The benefit of a parental monitoring app is that it gives you a convenient and personalized way to learn more about your child’s online activities.

“Parental monitoring” is a little different from another term you may have heard, “parental controls.” We define parental controls as tools and settings that allow parents to set firm guardrails around their child’s internet use and access, such as screen time limits and website blocking. Parental monitoring refers to tools that allow parents to supervise what their kids see and send online.

To that end, the best iPhone monitoring apps should give parents visibility into the apps their kids use the most.

Aura is an all-in-one platform that protects against identity theft and online threats. The service offers tiers based on your needs — the highest tier covers your entire family with identity, fraud, and child protection, while the lowest tier solely offers parental controls and child safety features.

Primarily an identity theft and fraud protection platform, it also allows parents to set website limits and restrictions, including popular platforms like YouTube and Discord. You can also set screen time limits, view reports on your child’s internet activity, and even temporarily disable internet access — helpful if your child has a tendency to scroll after dark. Aura is unique among parental monitoring apps for iPhone because it monitors online PC video games, alerting parents to threats like cyberbullying, scams, and predators.

Aura requires that you install an app on your child’s phone, and it doesn’t offer comprehensive parental monitoring across popular apps. For example, you can restrict Instagram, but you won’t get content alerts if your child gets a stranger in their direct messages. Aura also doesn’t monitor text messages on iPhone. Aura is best-suited for families who want a digital security solution that also monitors their child’s PC gaming.

Apple Screen Time is a free and robust suite of parental controls that are already loaded onto iOS. If you and your child have Apple devices, you can use Apple Screen Time to block apps and notifications during specific time periods (like school or bedtime), limit who your child can communicate with across phone calls, video calls, and messages, block inappropriate content, and — of course — set screen time limits.

However, Apple Screen Time doesn’t allow parents to monitor the content within specific apps. While Apple’s Communication Safety feature helps protect kids from sharing or receiving inappropriate photos and videos, that same protection doesn’t apply to other apps your child may use, like YouTube and Snapchat.

Apple Screen Time doesn't require that you download anything on your child's phone, but you will have to create an Apple ID for your child and add them to Family Sharing. This platform is best for families who want to set specific guardrails on their child's device, such as purchase limits on the App Store and content restrictions. Because Apple Screen Time is free, we recommend that parents explore the settings and use it alongside any other parental monitoring tools they choose. It's a good safety net for parents and kids on iPhones.

BrightCanary is one of the best parental monitoring apps for iPhone because it monitors what your child messages and sends across all their apps. Other apps only alert you when your child encounters something concerning, but BrightCanary gives you the option to view your child's latest keystrokes, as well as their emotional well-being, interests, and more.

The app works via a smart and secure keyboard, which you install on your child's device. BrightCanary's advanced AI monitors your child's activity and sends real-time alerts about any concerning content. Parents who want to monitor both sides of text conversations, images, and deleted texts can subscribe to Text Message Plus within the app.

The app includes a chatbot called Ask the Canary, which gives you helpful parenting guidance and conversation-starters 24/7. With a high rating in the App Store, parents have called BrightCanary the “best monitoring app I’ve found for iPhone.”

BrightCanary doesn't offer features like screen time limits or location sharing because those are freely available through Apple Screen Time. BrightCanary is ideal for parents who want to monitor the content of their child's online activities, including text messaging.

OurPact can manage or block any iOS app, including messaging and social media apps, and enforce screen time limits on a schedule you choose. The app also offers geofencing alerts, so you'll know when your kids arrive or leave locations like home or school. The Premium plan allows you to view automated screenshots of your child's online activity, including texts, although you won't receive alerts based on content monitoring.

Many of the app’s features are already freely available with Apple Screen Time, but OurPact’s well-designed interface may make these features more accessible for stressed-out parents.

Preference and accessibility are two big factors to consider when you're weighing parental monitoring apps for iPhone. Some apps are on the higher end of the price range because you get access to other features, like identity theft protection and location monitoring. Others differ in their philosophy.

For example, BrightCanary is designed to encourage communication and independence; rather than blocking apps, BrightCanary gives parents visibility into what their kids are doing online, so they can talk about any red flags together. That insight, plus helpful summaries and AI-powered conversation starters, means that your child is learning how to use the internet safely with your support — rather than blocking everything until they graduate high school.

One key factor to consider is which apps you’ll be able to monitor on iOS. For example, Snapchat is a major concern for many parents, but most parental monitoring apps don’t include Snapchat on iOS. (You can monitor sent messages in Snapchat with BrightCanary, though.) If you have a specific concern in mind, double-check that the monitoring app covers it.

Apple strictly limits what third-party apps can access, so many parental monitoring apps can monitor only part of your child's activity on iOS. Some companies get around that by requiring that you install an extra app on your child's device, but that can actually slow down your child's phone and drain their battery over time. Weigh the pros and cons, have a frank conversation with your child about online safety, and go with the parental monitoring app for iPhone that works best for your family. You've got this, parent.